AI Business Elements 101: LLMs, Copilots, and Agents


This article first appeared in Medium.

If I’m being honest (and I am), I’m writing this article to benefit both you and me. Keeping up with the terms and buzzwords that make up the Generative AI ecosystem can be daunting, and that’s coming from someone who reads articles and listens to podcasts on this very topic nearly every day! While I may know more about this topic than the average person, I can admit that it’s still only enough to be dangerous. Loosely understanding and discussing these concepts with colleagues and stakeholders will only get you so far. As I wrote before, it’s critical for your teams to be working from a shared set of definitions.

More importantly, your business must have a solid understanding of these concepts. Some of them have been ubiquitous in the past year. Some are about to become ubiquitous. They all will be a factor as organizations try to evolve/transform/reinvent themselves in response to the advances in AI. So, let’s dive in.

Large Language Models (LLMs), Conversational AI, Custom AI Assistants, AI Copilots, and Agentic AI… are just some of the main terms that you will come across in any article or conversation about Generative AI, business adoption and the future of the human race (not even a real exaggeration if you pay attention to the doomsayers, but I digress).

It can be challenging to know where one of these concepts ends and the next one begins, especially when everyone has a slightly (or even significantly) different definition of each. But fret not; we’ll explore each one using a simplified analogy: a library where books, librarians, research assistants, and more will all represent these generative AI concepts.

LLMs — Endless Shelves of Books in a Library


LLMs are AI models trained on enormous datasets to generate text, answer questions, and analyze information. Think of LLMs as an impossibly extensive library with vast quantities of books, shelves, maps, periodicals, and other forms of media in a massive and magnificent structure. In this case, you, the “library patron,” must know how to find the information you need. However, finding that information is both an art and a science when the knowledge source is as deep and wide as an ocean.

But if you’re old-school and prefer just a straight-shot, formal definition free of analogy, here you go:

A large language model (LLM) is a deep learning AI system trained on massive amounts of text data to understand and generate human-like language. It uses neural networks to process and predict text, enabling it to perform various natural language processing tasks such as translation, summarization, and content generation (Perplexity, 2025).

Examples of LLMs include OpenAI’s GPT-4o, Anthropic’s Claude 3 Sonnet, and Google’s Gemini 1.5 Pro. The distinction is subtle, but the underlying models I just listed aren’t the same as the interfaces that these companies build on top of them. You can access these models through a conversational UI such as a website or mobile app (see the next section) or directly through an API.

Either way, LLMs form the foundational knowledge source for other Generative AI concepts and capabilities. If LLMs represent the books and knowledge in the library, then conversational AI is like the librarian.

Conversational AI — Your Friendly and Knowledgeable Librarian

If LLMs are all of the books and knowledge artifacts in the library, then conversational AI is the librarian you can “interface” with to help find the knowledge you seek. You can ask the librarian a question and have a back-and-forth conversation with them until you are confident in the answer and your understanding. It is one of the most powerful aspects of this technology — the ability for human-style dialog and interaction.

However, conversational AI is a broad term; not all conversational AI tools leverage an LLM. For instance, chatbots can be considered conversational AI. But many traditional chatbots are simply rule-based with pre-written responses and limited interaction capabilities — think a work-study student or an intern who may have limited knowledge (and usefulness) depending on the question. Other chatbots use natural language processing (NLP) and are context-aware, meaning their answers are more conversational.
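To make the rule-based end of that spectrum concrete, here is a minimal sketch of a keyword-matching chatbot. The rule table, replies, and function name are all hypothetical and chosen to fit the library analogy; real products are more elaborate, but the basic limitation is the same: anything outside the pre-written rules gets a fallback answer.

```python
# Hypothetical rule table: keyword -> canned reply (illustrative only).
RULES = {
    "hours": "The library is open 9am to 5pm.",
    "renew": "You can renew a book at the front desk.",
}

# Reply used when no rule matches the patron's message.
FALLBACK = "Sorry, I don't understand. Please ask a staff member."


def rule_based_reply(message: str) -> str:
    """Return the first canned reply whose keyword appears in the message."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    return FALLBACK


if __name__ == "__main__":
    print(rule_based_reply("What are your hours?"))
    print(rule_based_reply("Can you recommend a Victorian novel?"))
```

The second question falls straight through to the fallback, which is exactly the "intern with limited knowledge" behavior described above. An LLM-backed tool would instead generate an original answer.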

Again, for those of you who may prefer a more straightforward definition of what Conversational AI is:

Conversational AI is an AI technology that enables machines to engage in human-like dialogue, understanding and responding to user inputs in a natural, interactive manner. In the context of generative AI, conversational AI refers to large language models that can engage in open-ended dialogue by generating original responses based on their training rather than selecting from pre-written answers (Claude [Anthropic], 2025).

Examples of Conversational AI include the web and mobile apps that serve as the front-end experience for major LLMs: OpenAI’s ChatGPT, Anthropic’s Claude, Google’s Gemini, and Perplexity, to name a few. For most people, the distinction between the LLM and the application that enables interaction (e.g., ChatGPT) is blurry — they are often perceived as one and the same. Technically, the LLM is the underlying model, while the application provides the conversational UI.

Other examples of conversational AI include chatbot products built for customer support, such as those offered by Zendesk or Intercom. New companies enter this space regularly, focusing on automating communication and improving user experiences. (Pro tip: You can even use some LLM-powered tools, like Perplexity, to explore these trends further!)

To recap, LLMs represent all of the library's content and knowledge. Conversational AI is the librarian generalist who “sits in front of the library” and can answer questions and converse with library patrons. Now, let’s get deeper and explore more specialized roles in the Generative AI ecosystem.

AI Copilots & Custom AI Assistants — The Research Specialists of Your AI Library

Think of AI Copilots and Custom AI Assistants as library employees who specialize in particular areas — acting as research assistants with advanced training to make your search for knowledge more efficient.

While similar, they serve different purposes. Custom AI Assistants are like general research assistants with an advanced degree in library science. They’re knowledgeable across a broad set of topics but lack the deep expertise in any one area that, say, a PhD in Victorian literature would bring. Flexible and task-oriented, Custom AI Assistants can be configured for specific topics or workflows but aren’t deeply integrated with software or specialized use cases.

Custom AI Assistants are specialized versions of conversational AI models configured to serve specific purposes or domains. They are instances of base AI models (like GPT-4, Claude, or Gemini) that have been customized with specific instructions, knowledge, and capabilities to focus on particular tasks, topics, or user needs (Claude [Anthropic], 2025).

For this article, “Custom AI Assistants” refers to tailored solutions different from the generic “AI assistants” like Alexa, Siri, or Google Assistant. Custom AI Assistants can be built using platforms such as OpenAI’s Custom GPTs, Claude Projects, or Gemini Gems, enabling individual employees, teams or businesses to adapt AI behavior to their unique needs.
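One way to picture "a base model plus specific instructions and knowledge" is the sketch below. It is not how any particular platform implements its assistants; the class, field names, and model name are hypothetical, and the system/user message layout is only assumed here because it is a common convention in chat-style APIs. Nothing is sent to a real service.

```python
from dataclasses import dataclass, field


@dataclass
class CustomAssistant:
    """A base model wrapped with fixed instructions and reference knowledge."""

    base_model: str                      # hypothetical model name
    instructions: str                    # how the assistant should behave
    knowledge: list[str] = field(default_factory=list)  # extra reference facts

    def build_request(self, user_message: str) -> dict:
        """Assemble a chat-style request payload (not sent anywhere)."""
        system_text = self.instructions
        if self.knowledge:
            facts = "\n".join(f"- {fact}" for fact in self.knowledge)
            system_text += "\n\nReference material:\n" + facts
        return {
            "model": self.base_model,
            "messages": [
                {"role": "system", "content": system_text},
                {"role": "user", "content": user_message},
            ],
        }


if __name__ == "__main__":
    policy_helper = CustomAssistant(
        base_model="example-model",
        instructions="You answer questions about library policy.",
        knowledge=["Loans last 21 days."],
    )
    print(policy_helper.build_request("How long can I keep a book?"))
```

The point of the sketch is that the underlying model is unchanged; the customization lives in the instructions and knowledge that travel with every request.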

AI Copilots, by contrast, specialize in deep expertise. Think of them as a research assistant with a PhD in a specific field (e.g., a PhD in Victorian Period Literature), offering practical, detailed guidance on a particular topic or use case. They are typically embedded in specific software platforms to enhance workflows. Microsoft Copilot, integrated into Office applications, is a leading example — but more Copilots are emerging weekly to serve specialized tasks and workflows.

AI Copilots are integrated AI tools that work directly within software applications and workflows to enhance user productivity and capabilities. They operate alongside users within specific environments (like coding platforms, productivity software, and operating systems) to provide contextual assistance and automate tasks (Claude [Anthropic], 2025).

In summary, while Custom AI Assistants go wide but not deep, AI Copilots go deep but not wide. Both augment Generative AI for specific workflows and use cases. Now, let’s explore the next evolution of these concepts: AI Agents.

AI Agents — The Autonomous Library-in-Action


AI Agents stretch the library analogy to its limits, but let’s keep running with it. Imagine, if you will, an entirely autonomous modern library. You walk in, and the library recognizes you as you scan your card at the entrance. A digital kiosk greets you by name as you walk up, suggests books and periodicals based on your past checkouts, and notices your MN Vikings sweatshirt, recommending sports periodicals — and a book about coping with disappointment.

The kiosk also detects that you’re with your 10-year-old daughter and suggests kid-friendly titles. After you choose books on the touchscreen, it asks if you have any upcoming trips. When you mention a vacation to the Canadian Rockies, it recommends travel guides and hiking books. Your selected items are automatically sent to the checkout desk, ready for pickup as you head out the door.

While this scenario is futuristic (and perhaps even a little dystopian depending on your point of view), it illustrates how AI Agents could transform workflows. Here’s a more formal definition for AI Agents — broadly referred to as Agentic AI.

AI agents are software entities that autonomously perceive their environment, make decisions, and take actions to achieve specific goals. They utilize data to analyze situations, learn from experiences, and adapt their behavior over time. This enables them to perform a variety of tasks across industries, enhancing efficiency and streamlining processes. AI agents can range from basic automated systems to advanced applications that mimic human-like interactions and decision-making (Perplexity, 2025).
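The "perceive, decide, act" cycle in that definition can be sketched as a simple loop. This is a toy illustration under stated assumptions, not a real agent framework: the environment is a plain dictionary, the function names are hypothetical, and the "decision" is a fixed rule where a production agent would typically call an LLM and external tools.

```python
def perceive(environment: dict) -> dict:
    """Observe which patron requests have not been fulfilled yet."""
    pending = [r for r in environment["requests"] if r not in environment["fulfilled"]]
    return {"pending": pending}


def decide(observation: dict) -> tuple:
    """Choose the next action: fetch the first pending item, or stop."""
    if observation["pending"]:
        return ("fetch", observation["pending"][0])
    return ("stop", None)


def act(environment: dict, action: tuple) -> None:
    """Carry out the action by updating the environment."""
    kind, item = action
    if kind == "fetch":
        environment["fulfilled"].append(item)


def run_agent(environment: dict, max_steps: int = 10) -> list:
    """Repeat perceive -> decide -> act until the goal is met or steps run out."""
    log = []
    for _ in range(max_steps):
        action = decide(perceive(environment))
        if action[0] == "stop":
            break
        act(environment, action)
        log.append(action)
    return log


if __name__ == "__main__":
    library = {"requests": ["travel guide", "hiking book"], "fulfilled": []}
    print(run_agent(library))
    print(library["fulfilled"])
```

The `max_steps` cap is a deliberate design choice: even in a toy example, an autonomous loop should have a limit so that a human stays in control of how far it runs.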

In this scenario, large parts of the library run themselves: retrieving knowledge, answering questions, conducting research, and making decisions autonomously. That’s the power of AI Agents. However, this doesn’t mean removing humans from the equation entirely. A librarian might not be needed for routine tasks but could instead devote more time and energy to high-touch interactions.

The benefits of Agentic AI are vast. By automating repetitive tasks, AI agents free employees to focus on complex, high-value work, improve customer experiences, and enable businesses to scale in critical areas. Early examples are emerging, with platforms like Salesforce’s Agentforce, CrewAI, and RelevanceAI among those paving the way.

Agentic AI Is Coming

That doesn’t need to sound as ominous as it might. AI Agents will generally be a good thing, though many roles may look drastically different in the future. There will be growing pains, and your company might not know where to begin. While this topic warrants an entire article (or series), I previously touched on it in my article about AI Transformation, where I outlined a crawl-walk-run approach to adopting generative AI (what I called “Intelligent Transformation”).

Here’s the TL;DR version:

Crawl: Achieve individual and team productivity gains using LLMs and Conversational AI tools.

Walk: Unlock domain-specific opportunities and capabilities by creating or using Custom AI Assistants and Copilots.

Run: Enable organization-level transformation by integrating these tools and adding Agentic AI into your Intelligent Transformation strategy.

While I present these as sequential steps, progress isn’t strictly linear. You can experiment with solutions from multiple phases simultaneously. Start by enabling employees to access LLMs and Conversational AI tools, but meaningful transformation will take shape as you focus on domain-specific workflows, such as customer support, engineering, or finance. AI Agents will likely deliver the most immediate value and ROI in these “vertical” use cases.

Empower your teams to engage in the crawl, walk, and run phases as outlined above (see my article, The New (Intelligent) Transformation: A Guide for AI Adoption). Equally important is creating a thoughtful, intentional strategy to augment your workforce — not just replace it. There will naturally be anxiety about job displacement. For intelligent transformation to succeed at your company, your employees must see themselves as part of the journey and buy into the vision, not view AI adoption as a slow march toward eliminating their roles.

Final Thoughts

Generative AI has many elements and layers that work together, like the different components of a library, to put knowledge at the fingertips of your employees. Each element is vital in making knowledge accessible, actionable, and transformative. Large Language Models are the books, Conversational AI is the friendly librarian guiding you, Custom AI Assistants and Copilots are the researchers with specialized expertise, and AI Agents represent the future: an autonomous, intelligent system capable of running aspects of the library... err, your company’s workflows.

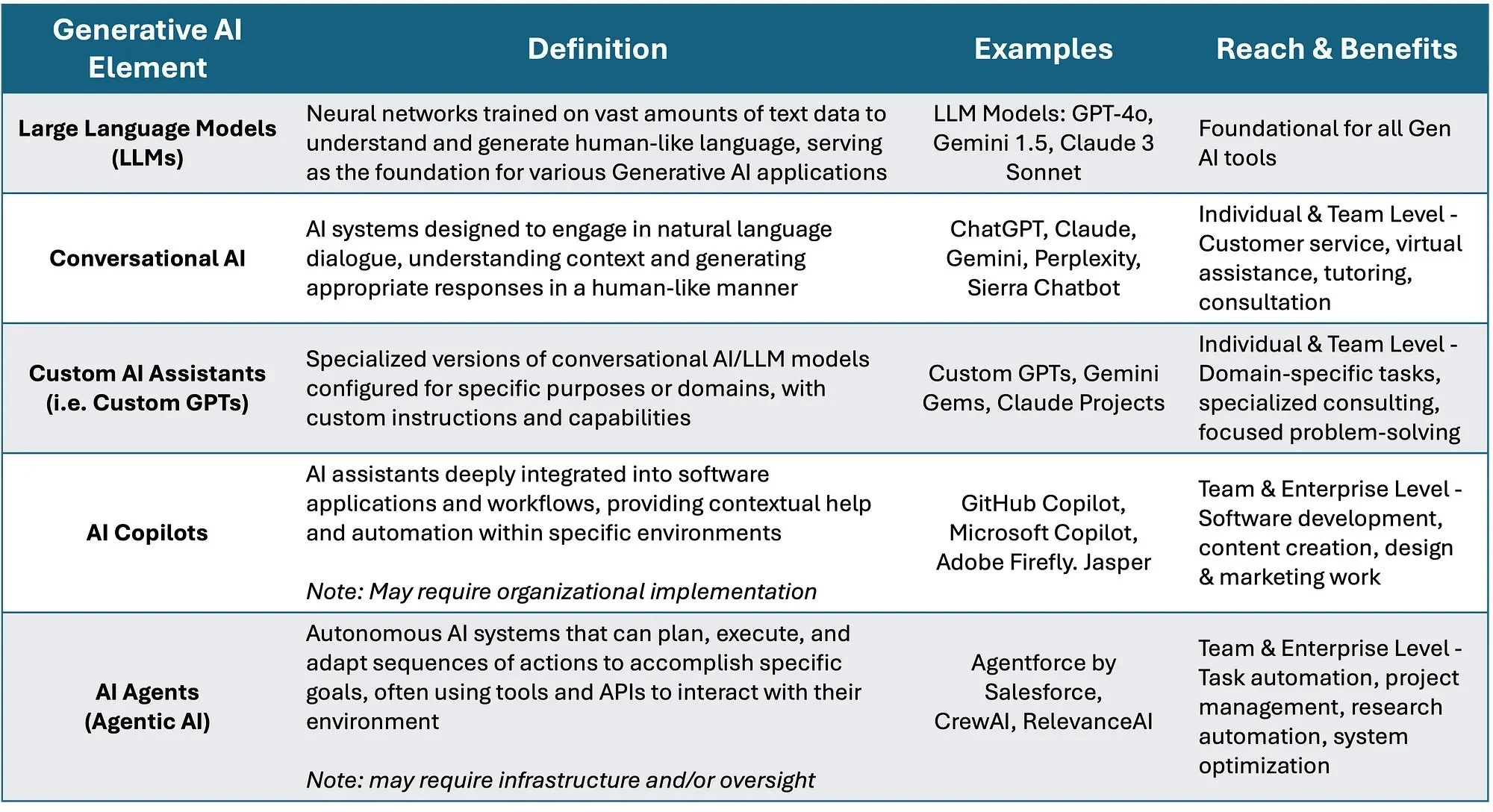

Cheatsheet Table of the Generative AI Elements Covered in this Article

This article is meant to simplify these concepts so you can move beyond buzzwords and begin to understand how to integrate them strategically into your business. Start small with tools like LLMs and Conversational AI, then enhance workflows with Custom AI Assistants and Copilots. Finally, explore domain-specific opportunities with AI Agents, a more advanced concept that can unlock even greater potential by automating complex, multi-step processes.

Adopting these technologies is not about replacing jobs — it’s about augmenting your workforce and empowering teams to focus on higher-value work. Take it one step at a time: experiment, iterate, and align AI with your strategic goals. The AI revolution is here, and it’s your turn to write the next chapter of your organization’s story.